OPERANT CONDITIONING

Operant conditioning is the use of consequences to modify the occurrence and form of behavior. Operant conditioning is distinguished from Pavlovian conditioning in that operant conditioning deals with the modification of "voluntary behavior" through the use of consequences, while Pavlovian conditioning deals with the conditioning of behavior so that it occurs under new antecedent conditions.

REINFORCEMENT, PUNISHMENT, AND EXTINCTION

Reinforcement and punishment, the core tools of operant conditioning, are either positive (delivered following a response), or negative (withdrawn following a response). This creates a total of four basic consequences, with the addition of a fifth procedure known as extinction (i.e. no change in consequences following a response).

It's important to note that organisms are not spoken of as being reinforced, punished, or extinguished; it is the response that is reinforced, punished, or extinguished. Additionally, reinforcement, punishment, and extinction are not terms whose use is restricted to the laboratory. Naturally occurring consequences can also be said to reinforce, punish, or extinguish behavior and are not always delivered by people.

. Reinforcement is a consequence that causes a behavior to occur with greater frequency.

. Punishment is a consequence that causes a behavior to occur with less frequency.

. Extinction is the lack of any consequence following a response. When a response is inconsequential, producing neither favorable nor unfavorable consequences, it will occur with less frequency.

Four contexts of operant conditioning: Here the terms "positive" and "negative" are not used in their popular sense, but rather: "positive" refers to addition, and "negative" refers to subtraction. What is added or subtracted may be either reinforcement or punishment. Hence positive punishment is sometimes a confusing term, as it denotes the addition of punishment (such as spanking or an electric shock), a context that may seem very negative in the lay sense. The four procedures are:

. Positive reinforcement occurs when a behavior (response) is followed by a favorable stimulus (commonly seen as pleasant) that increases the frequency of that behavior. In the Skinner box experiment, a stimulus such as food or sugar solution can be delivered when the rat engages in a target behavior, such as pressing a lever.

. Negative reinforcement occurs when a behavior (response) is followed by the removal of an aversive stimulus (commonly seen as unpleasant) thereby increasing that behavior's frequency. In the Skinner box experiment, negative reinforcement can be a loud noise continuously sounding inside the rat's cage until it engages in the target behavior, such as pressing a lever, upon which the loud noise is removed.

. Positive punishment (also called "Punishment by contingent stimulation") occurs when a behavior (response) is followed by an aversive stimulus, such as introducing a shock or loud noise, resulting in a decrease in that behavior.

. Negative punishment (also called "Punishment by contingent withdrawal") occurs when a behavior (response) is followed by the removal of a favorable stimulus, such as taking away a child's toy following an undesired behavior, resulting in a decrease in that behavior.
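The four procedures above form a two-by-two grid: whether a stimulus is added or removed after the response, and whether the behavior then becomes more or less frequent. A minimal Python sketch of that grid follows; the function name `classify_consequence` and its string arguments are hypothetical labels chosen for illustration, not standard terminology.

```python
def classify_consequence(stimulus_change, behavior_change):
    """Name the operant procedure for one consequence.

    stimulus_change: "added" or "removed" (what happens after the response).
    behavior_change: "increases" or "decreases" (effect on response frequency).
    Both argument vocabularies are illustrative assumptions.
    """
    # "positive" means a stimulus is added, "negative" means one is removed.
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    # Reinforcement raises the frequency of the behavior, punishment lowers it.
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behavior_change]
    return f"{sign} {kind}"


# Examples taken from the text:
print(classify_consequence("added", "increases"))    # food after a lever press
print(classify_consequence("removed", "increases"))  # loud noise switched off
print(classify_consequence("added", "decreases"))    # shock after a response
print(classify_consequence("removed", "decreases"))  # toy taken away
```

Note that the sketch classifies a consequence by its observed effect on behavior, not by whether the stimulus seems pleasant or unpleasant in the lay sense.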

Also:

. Avoidance learning is a type of learning in which a certain behavior results in the cessation or prevention of an aversive stimulus. For example, shielding one's eyes when in the sunlight (or going indoors) will help avoid the aversive stimulation of having light in one's eyes.

. Extinction occurs when a behavior (response) that had previously been reinforced is no longer effective. In the Skinner box experiment, this is the rat pushing the lever and being rewarded with a food pellet several times, and then pushing the lever again and never receiving a food pellet again. Eventually the rat would cease pushing the lever.

. Non-contingent Reinforcement is a procedure that decreases the frequency of a behavior by both reinforcing alternative behaviors and extinguishing the undesired behavior. Since the alternative behaviors are reinforced, they increase in frequency and therefore compete for time with the undesired behavior.

THORNDIKE'S LAW OF EFFECT

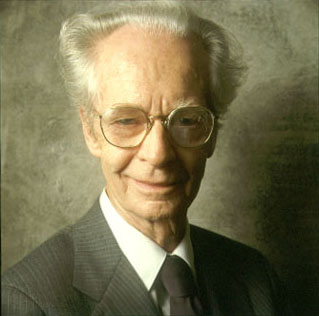

Operant conditioning, sometimes called instrumental conditioning or instrumental learning, was first extensively studied by Edward L. Thorndike (1874-1949), who observed the behavior of cats trying to escape from home-made puzzle boxes.[2] When first constrained in the boxes, the cats took a long time to escape. With experience, ineffective responses occurred less frequently and successful responses occurred more frequently, enabling the cats to escape in less time over successive trials. In his Law of Effect, Thorndike theorized that successful responses, those producing satisfying consequences, were "stamped in" by the experience and thus occurred more frequently. Unsuccessful responses, those producing annoying consequences, were stamped out and subsequently occurred less frequently. In short, some consequences strengthened behavior and some consequences weakened behavior. B.F. Skinner (1904-1990) formulated a more detailed analysis of operant conditioning based on reinforcement, punishment, and extinction. Following the ideas of Ernst Mach, Skinner rejected Thorndike's mediating structures required by "satisfaction" and constructed a new conceptualization of behavior without any such references. Moreover, Thorndike's work with puzzle boxes produced no meaningful data to be studied other than a measure of escape times. So while experimenting with some homemade feeding mechanisms, Skinner invented the operant conditioning chamber, which allowed him to measure rate of response as a key dependent variable using a cumulative record of lever presses or key pecks.

Operant Conditioning vs Fixed Action Patterns

Skinner's construct of instrumental learning is contrasted with what Nobel Prize winning biologist Konrad Lorenz termed "fixed action patterns," or reflexive, impulsive, or instinctive behaviors. These behaviors were said by Skinner and others to exist outside the parameters of operant conditioning but were considered essential to a comprehensive analysis of behavior.

In dog training that uses the prey drive, particularly the training of working dogs and detection dogs, the stimulation of these fixed action patterns, relative to the dog's predatory instincts, is the key to producing very difficult yet consistent behaviors and, in most cases, does not involve operant, classical, or any other kind of conditioning. While evolutionary processes shaped these fixed action patterns, the patterns themselves remained stable long enough to be shaped by the long time span necessary for evolution because of their survival function (i.e., operant conditioning).

According to the laws of operant conditioning, any behavior that is consistently rewarded, every single time, will extinguish at a faster rate once reinforcement stops, while intermittently reinforcing behavior leads to more stable rates of behavior that are relatively more resistant to extinction. Thus, in detection dogs, any correct behavior of indicating a "find" must always be rewarded with a tug toy or a ball throw early on for initial acquisition of the behavior. Thereafter, fading procedures, in which the rate of reinforcement is "thinned" (not every response is reinforced), are introduced, switching the dog to an intermittent schedule of reinforcement, which is more resistant to instances of non-reinforcement.
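The move from rewarding every response to rewarding only some of them can be sketched as a staged schedule. The Python example below is a simplified illustration only: the function name `thinned_schedule`, the stage format, and the use of fixed ratios (every 2nd, then every 3rd response) are assumptions made for clarity. Real training schedules are often variable rather than fixed.

```python
def thinned_schedule(stages):
    """Return one True/False per response: was that response reinforced?

    stages: list of (number_of_responses, ratio) pairs, applied in order.
    A ratio of 1 is continuous reinforcement; a ratio of n reinforces every
    n-th response within that stage (a fixed-ratio simplification).
    """
    outcomes = []
    for n_responses, ratio in stages:
        if ratio < 1:
            raise ValueError("ratio must be at least 1")
        for k in range(1, n_responses + 1):
            # Reinforce only the responses whose count is a multiple of ratio.
            outcomes.append(k % ratio == 0)
    return outcomes


# Acquisition (every response rewarded), then progressively thinner stages.
plan = thinned_schedule([(3, 1), (4, 2), (6, 3)])
print(sum(plan), "of", len(plan), "responses reinforced")
```

In this hypothetical plan the first three responses are all reinforced, after which the proportion of reinforced responses drops to one half and then one third.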

Nevertheless, some trainers are now using the prey drive to train pet dogs and find that they get far better results in the dogs' responses to training than when they only use the principles of operant conditioning which, according to Skinner and his students Keller and Marian Breland (who invented clicker training), break down when strong instincts are at play.

Criticisms

Thorndike's law of effect specifically requires that a behavior be followed by satisfying consequences for learning to occur. There are, however, cases in which learning can be shown to occur without good or bad effects following the behavior. For instance, a number of experiments examining the phenomenon of latent learning[5][6][7][8] showed that a rat needn't receive a satisfying reward (food, if hungry; water, if thirsty) in order to learn a maze; learning that becomes apparent immediately after the desired reward is introduced.

A different experiment, in humans, showed that punishing the correct behavior may actually cause it to be more frequently taken (i.e. stamp it in)[9]. Subjects are given a number of pairs of holes on a large board and required to learn which hole to poke a stylus through for each pair. If the subjects receive an electric shock for punching the correct hole, they learn which hole is correct more quickly than subjects who receive an electric shock for punching the incorrect hole.

AVOIDANCE LEARNING

Avoidance training belongs to negative reinforcement schedules. The subject learns that a certain response will result in the termination or prevention of an aversive stimulus. There are two kinds of commonly used experimental settings: discriminated and free-operant avoidance learning.

Discriminated Avoidance Learning

In discriminated avoidance learning, a novel stimulus such as a light or a tone is followed by an aversive stimulus such as a shock (CS-US, similar to classical conditioning). During the first trials (called escape trials) the animal usually experiences both the CS and the US, showing the operant response to terminate the aversive US. Over time, the animal learns to perform the response during the presentation of the CS, thus preventing the aversive US from occurring. Such trials are called avoidance trials.
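The difference between escape and avoidance trials comes down to whether the response occurs before or after the US would begin. A minimal Python sketch under stated assumptions: the function name `classify_trial` and its parameters are hypothetical, time is measured in seconds from CS onset, and every trial is assumed to end with a response.

```python
def classify_trial(response_latency, cs_us_interval):
    """Label one discriminated-avoidance trial.

    response_latency: seconds from CS onset to the operant response.
    cs_us_interval: seconds from CS onset to the scheduled US onset.
    Assumes the animal responds on every trial (an illustrative simplification).
    """
    if response_latency < cs_us_interval:
        # Response during the CS: the US is prevented.
        return "avoidance"
    # Response only after the US has started: the US is terminated.
    return "escape"


# Hypothetical latencies across training, with the US due 10 s after CS onset.
latencies = [14.0, 12.5, 11.0, 8.0, 4.5]
print([classify_trial(t, 10.0) for t in latencies])
```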

Free-Operant Avoidance Learning

In this experimental setting, no discrete stimulus is used to signal the occurrence of the aversive stimulus. Rather, the aversive stimulus (usually a shock) is presented without explicit warning stimuli. There are two crucial time intervals determining the rate of avoidance learning. The first one is called the S-S-interval (shock-shock-interval). This is the amount of time which passes between successive presentations of the shock (unless the operant response is performed). The other one is called the R-S-interval (response-shock-interval), which specifies the length of the time interval following an operant response during which no shocks will be delivered. Note that each time the organism performs the operant response, the R-S-interval without shocks starts anew.
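The interplay of the two intervals can be made concrete with a small simulation. The Python sketch below is an illustration under stated assumptions, not a description of any particular apparatus: the function name `shock_times` and its parameters are hypothetical, the first shock is assumed to be due one S-S-interval after the session starts, and a shock is assumed to be delivered when an R-S-interval runs out without a new response, after which the S-S-interval applies again.

```python
def shock_times(response_times, ss_interval, rs_interval, session_length):
    """Return the times (in seconds) at which shocks are delivered.

    response_times: times of operant responses within the session.
    ss_interval: shock-shock interval, used while no response occurs.
    rs_interval: response-shock interval, restarted by every response.
    session_length: total session duration.
    """
    if ss_interval <= 0 or rs_interval <= 0:
        raise ValueError("intervals must be positive")
    shocks = []
    responses = sorted(response_times)
    next_shock = ss_interval  # assumption: first shock due after one S-S-interval
    i = 0
    while next_shock <= session_length:
        if i < len(responses) and responses[i] < next_shock:
            # A response arrives first: the shock-free R-S-interval starts anew.
            next_shock = responses[i] + rs_interval
            i += 1
        else:
            # No response in time: deliver the shock, then wait one S-S-interval.
            shocks.append(next_shock)
            next_shock += ss_interval
    return shocks


# No responding: shocks arrive every S-S-interval.
print(shock_times([], ss_interval=5, rs_interval=20, session_length=20))
# Steady responding every 10 s with a 20 s R-S-interval: no shocks at all.
print(shock_times([0, 10, 20, 30, 40, 50], ss_interval=5, rs_interval=20, session_length=60))
```

Under these assumptions, an organism that responds at least once per R-S-interval never receives a shock, even though no warning stimulus is ever presented.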

TWO-PROCESS THEORY OF AVOIDANCE

This theory was originally established to explain learning in discriminated avoidance learning. It assumes two processes to take place.

a) Classical conditioning of fear. During the first trials of the training, the organism experiences both CS and aversive US (escape trials). The theory assumes that during those trials classical conditioning takes place by pairing the CS with the US. Because of the aversive nature of the US, the CS is supposed to elicit a conditioned emotional reaction (CER): fear. In classical conditioning, presenting a CS conditioned with an aversive US disrupts the organism's ongoing behavior.

b) Reinforcement of the operant response by fear reduction. Because during the first process the CS signaling the aversive US has itself become aversive by eliciting fear in the organism, reducing this unpleasant emotional reaction serves to motivate the operant response. The organism learns to make the response during the CS, thus terminating the aversive internal reaction elicited by the CS.

An important aspect of this theory is that the term "avoidance" does not really describe what the organism is doing. It does not "avoid" the aversive US in the sense of anticipating it. Rather, the organism escapes an aversive internal state caused by the CS.

One of the practical aspects of operant conditioning with relation to animal training is the use of shaping (reinforcing successive approximations and not reinforcing behavior past approximating), as well as chaining.
